The key front end sensors I wish to build for this project are an ELF/VLF radio receiver and a seismometer. The frequency ranges of interest here are < 20kHz, in other words, in the audio range (and probably extending a little lower).

As it happens, in a past life I studied audio frequency electronics, transducers, signals and systems, DSP and so on formally for 3 years, have written articles on (nonlinear) analog electronics for a magazine, and probably more significantly have been an electronic music hobbyist for around 40 years. In short, I consider myself something of an expert in the field. The word for this is hubris.

I started planning the sensors like a bull at a gate. On the seismic side, I hadn’t really thought things through very well. On the radio side – I’d only really skimmed Radio Nature, and my knowledge of radio reception is minimal. Since then, the flaws in my ideas have poured out.

Seismic Errors

I’ve got a design for seismic signal sensors roughed out. While a magnet & coil is a more traditional way of detecting audio frequency deflections, I thought it would be neater somehow to use semiconductor Hall Effect devices. A standard design for a proximity detector is one of these components (which are housed much like transistors) backed by a magnet. When a something like a piece of iron passes by, the magnetic flux varies and hence the output of the device (linear output devices are available).

So for my seismometer, the moving part will be a steel ball bearing on a spring, hanging in a jar of oil (for damping). There will be 3 sensors located in the x, y & z directions (N-S, E-W, up-down) relative to this.

One potential complication with this setup had occurred to me. For a (relatively) linear response, the ball bearing would have to move in line with the face of the sensor. Obviously, in practice, most of the time the movement will be off-axis. However, my thinking went, there should still be enough information coming from all 3 sensors in combination to potential determine the deflection of the ball bearing. The data produced by these sensors will ultimately go into a neural network system, and they’re good at figuring out peculiar relationships.

But I’d missed another potential source of problems, it only came to me a couple of days ago. There is likely to be significant, pretty complex, interaction between the ball bearing and all 3 magnets. Whether or not this additional complication will be such that that the directional seismic information is totally obfuscated remains to be seen. I plan to experiment, maybe I’ll need 3 independent sensors…

Loopy

A little learning is a dang’rous thing. The danger I faced was wasting time & money in building a VLF receiver that simply couldn’t work.

I’d only skimmed the material, but something about the use of a coil as a receiver appealed to me. But the designs I’d seen were all pretty big, say around 1m in diameter. Hmm, thinks I, why not shrink that down to say 30cm and just boost the gain of the receiver circuit. It was only after I’d wound such a coil and picked up nothing but hum & noise that I got around to reading a little more.

It turns out there are two related issues involved: the way a small (relative to wavelength) loop antenna works isn’t exactly intuitive, and also its output is very low. It’s frequency-dependent, but the level of the desired signal is at a similar order of magnitude as the thermal noise generated by the loop, less than that of many op amps. The good Signore Romero, author of Radio Nature, has a practical discussion of this in his description of A Minimal ELF Loop Receiver. (Being at the low end of the frequency range of interest make this rather a worst-case scenario, but the points still apply). Basically there’s a good reason for having a big coil.

Another possible design flaw coming from my lack of learning is that I initially thought it would make sense to have coils in the x, y & z dimensions. As it turns out, because VLF signals are propagated as ground waves (between the surface of the planet and the ionosphere), pretty much all a coil in the horizontal plane will pick up is local noise such as mains hum. But I’m not yet discarding the inclusion of such a loop. Given the kind of neural net processing I have in mind, a signal that is comprised of little more than local noise may well be useful (in effect subtract this from the other signals).

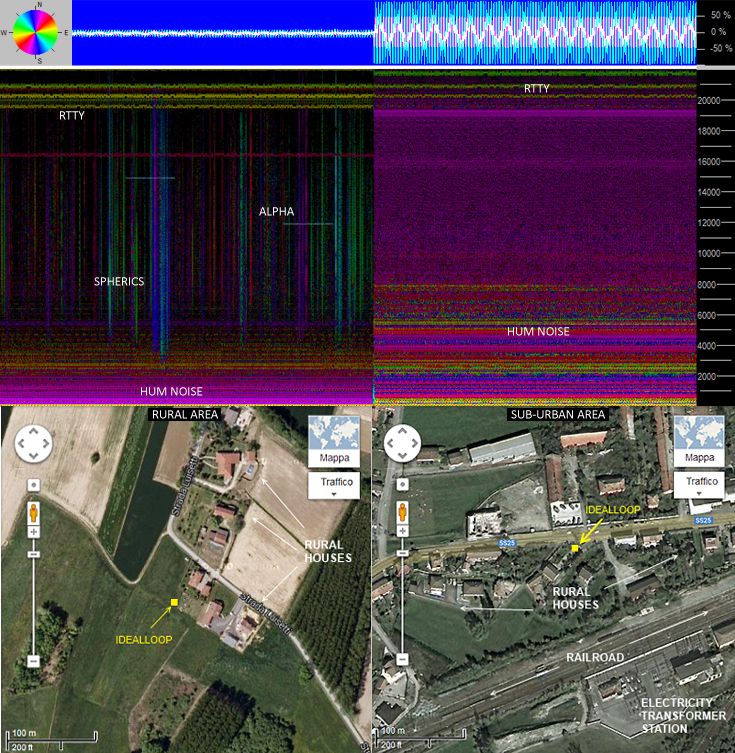

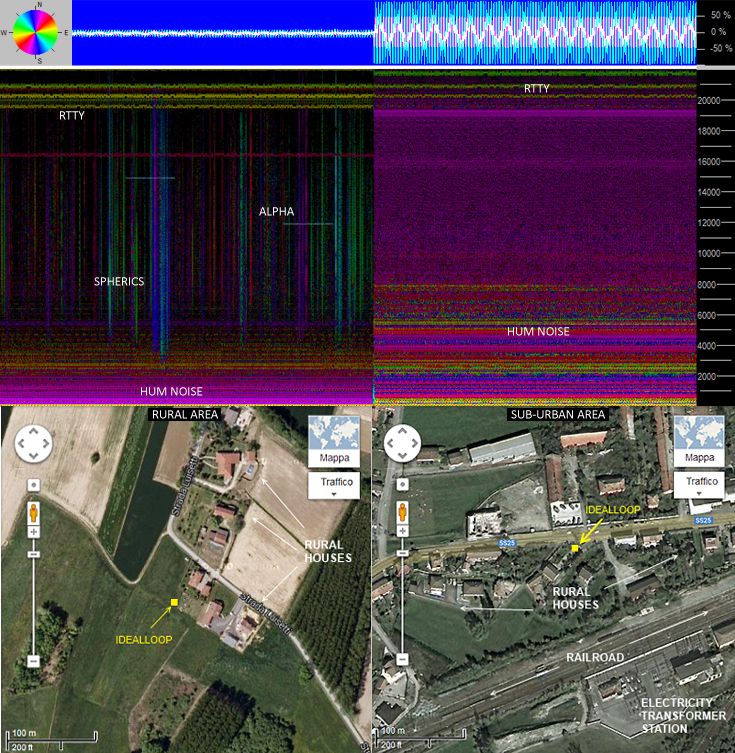

But even having said all this, a loop antenna may still be of no use for me here – Noise Annoys. Renato has an image that nicely sums up the potential problem:

Right now I don’t have the funds to build a loop antenna of any description (big coils use a lot of wire!) but as and when I can, I’ll probably be looking at something along the lines of Renato et al’s IdealLoop (the image above comes from that page).

I do have the components to put together some kind of little portable whip antenna (electric field) receiver, I think I’ll have a look at that next, particularly to try and get an idea of how the noise levels vary in this locale.

I’ve also got one linear Hall effect sensor, so I can have a play around with that to try and get some idea of my seismometer design’s viability.